Applying the Science of Learning Using Tolerance Zones

The engineering concept of Tolerance Zones offers a useful framework for understanding how to effectively implement retrieval practice

In recent decades, cognitive science has yielded remarkable insights into how humans learn, remember, and apply knowledge. However, a persistent challenge remains: translating these laboratory-derived principles into effective classroom practices. Tom Perry’s Review of cognitive science in the classroom highlighted this troubling disconnect, noting that while the science offers robust theoretical frameworks, their implementation in authentic educational settings often falters.

That review proved to be something of a Rorschach blot, in that people read into it what they wanted to read. I was very struck, though, by a subsequent talk Tom gave in which he outlined some insightful and well-considered questions (which I took to be a sort of useful algorithm) for any teacher or school leader looking to implement retrieval practice effectively. Where is your error correction built in? Is feedback immediate or delayed? Have you considered pupil ability level? Unfortunately, I think the science is being applied unevenly, and in many cases very effective methods such as retrieval practice are in danger of turning into a lethal mutation.

My own view is that this gap between evidence and practice stems not from flawed science but from insufficient attention to how to actually apply it at the point of use. So rather than seeking perfect fidelity to experimental conditions (never achievable anyway), we might instead adopt a tolerance zone approach when applying these principles in real classrooms.

This framework acknowledges that cognitive science principles can withstand certain adaptations while maintaining their core benefits or ‘structural integrity’. By calibrating interventions to operate within these tolerance zones, and by paying close attention to parameters such as success rates and spacing intervals, educators could implement findings from learning science with what might better be termed a kind of "functional fidelity", i.e. preserving the essential mechanisms that drive learning while attending to the practical constraints and ecological complexity of real classrooms.

What Are Tolerance Zones?

In engineering, Tolerance Zones refer to the acceptable range within which a component can operate effectively despite variations. Outside these zones, performance degrades or fails entirely. Since achieving exact measurements in manufacturing is unrealistic, tolerances are specified in production drawings to ensure parts remain within acceptable limits while maintaining functionality. How might this be useful for applying cognitive science?

In a recent post I outlined several key findings from research on retrieval practice. The testing effect remains one of the best-evidenced learning strategies in cognitive science, with robust support from laboratory studies showing that actively recalling information strengthens memory more effectively than passive review. However, when applied in classrooms, I am concerned that retrieval practice is fast becoming a lethal mutation, and I want to offer this concept of tolerance as a way of thinking about applying these findings in a meaningful way. Most classroom teachers face a set of challenges, including time constraints, curriculum coverage pressures, and the diverse cognitive needs of students, which can mean retrieval practice is implemented in a less than effective way.

The optimal zone or ‘sweet spot’ for retrieval practice

Applying the tolerance zone framework to retrieval practice suggests there are critical parameters that must be maintained for efficacy, while others allow for adaptation. Core elements within the tolerance zone likely include spacing retrieval attempts appropriately, ensuring sufficient challenge to promote effortful processing, and providing timely feedback to correct misconceptions. Outside these tolerance zones, i.e. when retrieval is too easy, too difficult, or feedback is absent, the cognitive benefits may diminish substantially or disappear entirely. In other words, it’s a waste of time.

Research consistently demonstrates that to maintain the ‘structural integrity’ of the testing effect or retrieval practice, students must experience the appropriate level of challenge. This sweet spot, where recall is effortful but achievable, represents the optimal operating conditions within our tolerance zone. When implementation shifts too far toward simplicity (mere recognition rather than recall) or excessive difficulty (where retrieval repeatedly fails), we observe significant degradation in learning outcomes and even motivation, indicating we've exceeded the zone's boundaries.

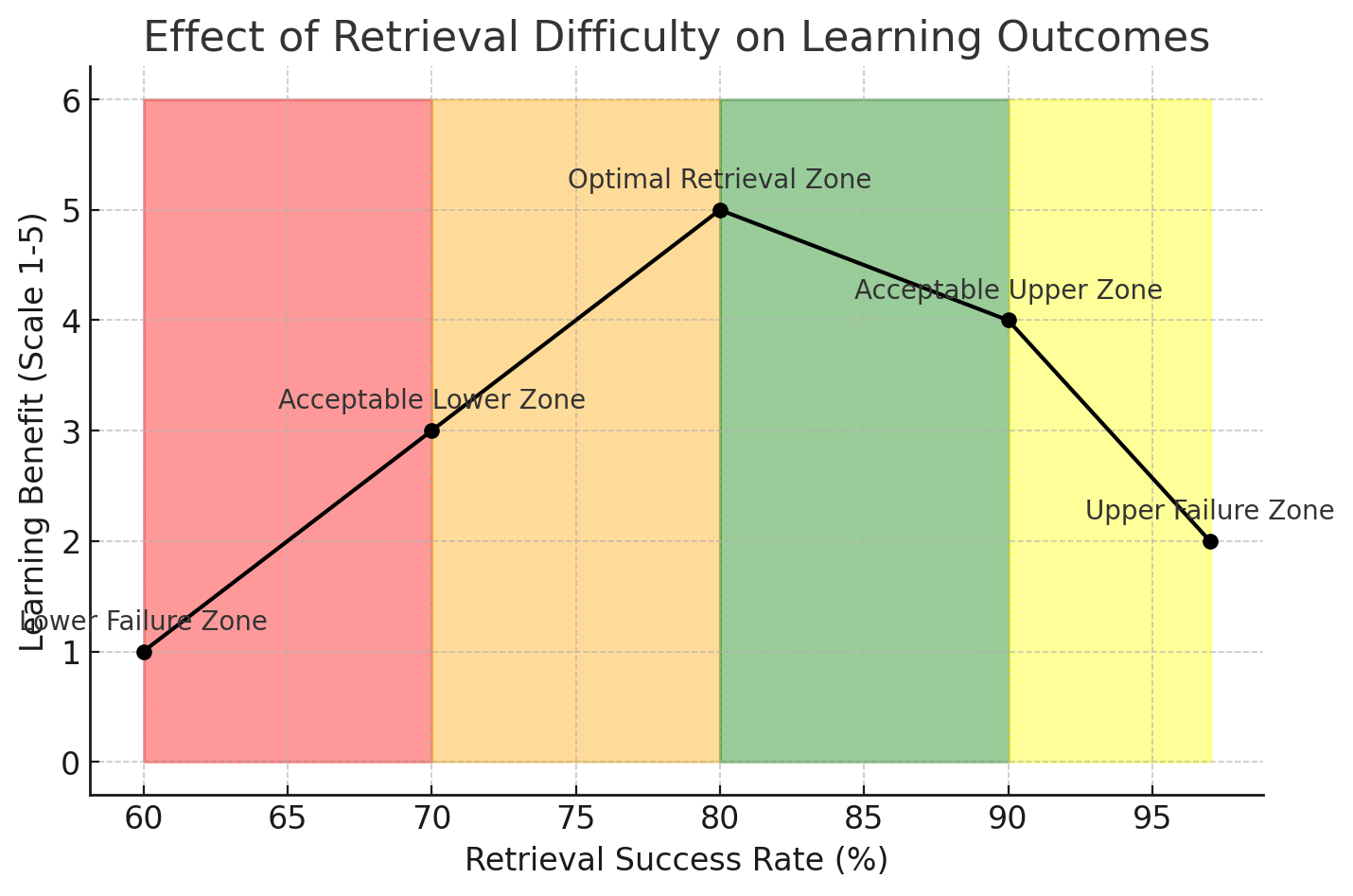

Rowland's (2014) meta-analysis found support for a relationship between initial test performance and the magnitude of the testing effect. Pyc and Rawson (2009) found that more challenging retrievals, provided they are successful, lead to better long-term retention compared to easier retrievals. They had participants learn Swahili-English word pairs and found that items retrieved with moderate difficulty (requiring some effort but successful retrieval) showed better long-term retention than items that were either too easy or too difficult to retrieve. Their findings suggested that there's an optimal level of retrieval difficulty that maximises learning. So, as a working heuristic, we might consider the following zones for student success rate when engaging in retrieval practice:

Zone 1: Optimal Retrieval Challenge (75-85% Success Rate)

This is where we want to be: the Goldilocks zone. In this ideal operating zone, students experience just the right amount of challenge with a high probability of success. This represents the optimal operating conditions for memory formation and learning, where challenge and achievement are perfectly balanced. Like a system functioning within its ideal parameters, this zone maximises memory strengthening while maintaining motivation. Teachers should aim to keep retrieval success within these upper and lower control limits (75-85%) for core concept mastery, as it provides the most efficient learning environment.

Zone 2: Acceptable Upper Zone (85-95% Success Rate)

This zone is approaching too easy. It represents retrieval practice nearing the upper degradation threshold: still functional, but operating with reduced effect. While the system remains within acceptable control limits, it begins to lose efficiency as tasks become too easy to trigger optimal memory consolidation. This zone still provides value for confidence building and reinforcing well-learned material, making it suitable for review sessions and building momentum in learning sequences.

Zone 3: Acceptable Lower Zone (65-75% Success Rate)

This zone is approaching too hard. Operating just below the optimal zone but still above the lower degradation threshold, this zone presents more challenging retrieval that pushes students' capacity limits. Like a system functioning under increased stress while remaining within acceptable control limits, this zone can enhance motivation for high-performing students who benefit from greater challenge, but it will also demotivate many others. Teachers should use this zone selectively and monitor performance carefully as it approaches the lower boundary of acceptable function.

Zone 4: Upper Failure Zone (>95% Success Rate)

Way too easy. Beyond the upper degradation threshold lies this system failure point where retrieval practice becomes so easy it requires minimal cognitive effort. The system operates far below capacity, resulting in inefficient learning with little memory strengthening or meaningful benefit. This represents a failure mode where the lack of challenge prevents the retrieval mechanisms that drive learning from engaging properly. Teachers should recognise when activities cross this threshold and adjust difficulty accordingly.

Zone 5: Lower Failure Zone (<65% Success Rate)

Way too hard. This zone represents operation beyond the lower degradation threshold and into a critical system failure point. When retrieval challenges are so difficult that most students consistently fail, the cognitive system experiences the equivalent of mechanical failure, producing frustration, reinforcement of errors, and student disengagement rather than learning. Teachers should immediately recognise when class performance falls below this control limit and implement corrective measures through additional instruction or scaffolding.
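The five zones above can be written down as a simple lookup. This is only a sketch: the function name is hypothetical, and because the bands as stated share their edges (75% and 85% each appear in two zones), it assumes that exactly 75% and exactly 85% count as optimal. The outer limits follow the text, so 95% is still Zone 2 and 65% is still Zone 3.

```python
def classify_zone(success_rate: float) -> str:
    """Map a success rate between 0.0 and 1.0 to one of the five zones.

    Assumption: the shared edges at 75% and 85% are assigned to Zone 1.
    """
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be between 0.0 and 1.0")
    if success_rate > 0.95:
        return "Zone 4: Upper Failure"
    if success_rate > 0.85:
        return "Zone 2: Acceptable Upper"
    if success_rate >= 0.75:
        return "Zone 1: Optimal"
    if success_rate >= 0.65:
        return "Zone 3: Acceptable Lower"
    return "Zone 5: Lower Failure"


# Example: 16 correct answers out of 20 attempts
print(classify_zone(16 / 20))
```

A different edge convention would work just as well, provided it is applied consistently.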

The key consideration when setting these educational tolerance zones mirrors that of engineering: to determine how wide the tolerances may be without affecting other factors or the outcome of a process. Just as manufacturing recognises that no machine can hold dimensions precisely to the nominal value, no classroom implementation can perfectly mirror laboratory conditions. The challenge becomes identifying which variations are acceptable and which render an intervention "noncompliant" or ineffective. So how might this play out in an actual classroom?

Practical Classroom Implementation Using Tolerance Zones

During a curriculum planning phase, teachers (or, controversially, curriculum designers) could identify key concepts that require strong retention and flag them for zone-optimised retrieval practice. School assessment calendars can incorporate brief zone diagnostic activities before unit assessments, with results feeding into both summative data and future lesson adjustments. Many schools already use formative assessment systems that can be calibrated to report success rates within the tolerance zone framework. Exit ticket routines, digital quiz platforms, and traditional homework reviews can be adapted to track performance within these zones without adding extra workload for teachers.
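Before tracking anything, it helps to decide what "success rate" actually measures: the share of questions a student gets right, the share of students who get a question right, or a single class-wide figure. The sketch below computes all three from one set of exit-ticket results. The data, student labels, and function names are invented for illustration and do not come from any particular assessment platform.

```python
# Hypothetical exit-ticket results: one list per student, one entry per question,
# True where the answer was correct. Names and data are invented for illustration.
responses = {
    "student_a": [True, True, False, True],
    "student_b": [True, False, False, True],
    "student_c": [True, True, True, True],
}


def per_student_rates(responses):
    """Share of questions each student answered correctly."""
    return {name: sum(answers) / len(answers) for name, answers in responses.items()}


def per_question_rates(responses):
    """Share of students who answered each question correctly."""
    columns = list(zip(*responses.values()))
    return [sum(column) / len(column) for column in columns]


def class_rate(responses):
    """Share of all answers across the class that were correct."""
    all_answers = [a for answers in responses.values() for a in answers]
    return sum(all_answers) / len(all_answers)


print(per_student_rates(responses))
print(per_question_rates(responses))
print(class_rate(responses))
```

With this made-up data the class-wide rate is 75%, which sits inside the optimal band, yet the third question was answered correctly by only one student in three and student_b is at 50%. Which of these figures a teacher calibrates against is a design choice.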

AI-driven adaptive learning systems will inevitably be very useful here and could continuously analyse student performance data to determine where each student falls within the zones, automatically calibrating retrieval practice difficulty to maintain the optimal 75-85% success rate. Traditional methods require manual tracking of success rates across multiple attempts. Adaptive AI systems can automate this by analysing response patterns, speed, and confidence to adjust retrieval difficulty in real time.
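To make the calibration idea concrete, here is a deliberately simple rule-based sketch. It is not a description of how any existing adaptive platform works. It assumes difficulty can be expressed as a level from 1 to 5, looks only at whether each answer was correct (ignoring speed and confidence), and uses a rolling window whose size is an arbitrary choice. The class name and parameters are hypothetical.

```python
from collections import deque


class RetrievalCalibrator:
    """Nudges a difficulty level so the rolling success rate stays near 75-85%."""

    def __init__(self, window=20, min_level=1, max_level=5, start_level=3):
        self.results = deque(maxlen=window)  # most recent correct/incorrect answers
        self.min_level = min_level
        self.max_level = max_level
        self.level = start_level

    def record(self, correct: bool) -> int:
        """Store one answer and return the difficulty level to use next."""
        self.results.append(correct)
        # Wait for a full window so that a handful of answers cannot swing the level.
        if len(self.results) == self.results.maxlen:
            rate = sum(self.results) / len(self.results)
            if rate > 0.85 and self.level < self.max_level:
                self.level += 1          # too easy: step difficulty up
                self.results.clear()     # start a fresh window at the new level
            elif rate < 0.75 and self.level > self.min_level:
                self.level -= 1          # too hard: step difficulty down
                self.results.clear()
        return self.level


calibrator = RetrievalCalibrator(window=4)
for answer in [True, True, True, True]:
    next_level = calibrator.record(answer)
print(next_level)
```

Clearing the window after each change means the next adjustment is based only on answers given at the new level, which keeps the rule from over-correcting.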

Concrete Examples Across Zones

Consider a secondary (high school) chemistry unit on periodic table elements.

Zone 5 (<65%): "Explain how the atomic radius trends relate to ionization energy across the periodic table" (too conceptually demanding without scaffolding)

Zone 3 (65-75%): "Which other elements share similar chemical properties with magnesium? Explain briefly."

Zone 1 (75-85%): "Describe the relationship between an element's position on the periodic table and its electron configuration"

Zone 2 (85-95%): "Which group contains elements with one valence electron?" (with multiple similar elements to choose from)

Zone 4 (>95%): "Is hydrogen a metal or non-metal?" (overly simplistic recognition)

Adaptive Feedback Strategies

Feedback strategies should adapt to each zone:

Zone 1 (Optimal Zone): Provide brief confirmatory feedback for correct answers and targeted explanations for errors, focusing on reinforcing connections between concepts

Zone 2 (Upper Zone): Use feedback to increase challenge - "Yes, that's correct. Can you also explain why...?" to push students toward more complex understanding

Zone 3 (Lower Zone): If a student struggles to explain ionization energy trends, provide a worked example showing how atomic radius and nuclear charge affect energy levels.

Zone 4 (Upper Failure): Feedback should introduce complexity and connections, transforming simple recognition into more challenging retrieval opportunities

Zone 5 (Lower Failure): Implement immediate corrective feedback with conceptual re-teaching and simpler retrieval opportunities to rebuild confidence before returning to challenging material

The challenge I would anticipate here is ensuring that retrieval practice remains practically feasible for teachers while maintaining its scientific validity. First, classroom variability presents a significant hurdle. Students differ in prior knowledge, motivation, and cognitive capacity, making it difficult to maintain uniform success rates across a class. Second, teacher workload and assessment logistics could create barriers: while AI-driven solutions could help with adaptive difficulty calibration, many schools lack the resources or infrastructure to implement such technology effectively, although I think that is about to change. What this really underlines to me is that we need very specific expertise in curriculum design and planning combined with a sophisticated understanding of cognitive science.

Could this approach work? Let me know in the comments below.

Works Cited

Pyc, M. A., & Rawson, K. A. (2009). Testing the retrieval effort hypothesis: Does greater difficulty correctly recalling information lead to higher levels of memory? Journal of Memory and Language, 60(4), 437–447. https://doi.org/10.1016/j.jml.2009.01.004

Rowland, C. A. (2014). The effect of testing versus restudy on retention: A meta-analytic review of the testing effect. Psychological Bulletin, 140(6), 1432–1463. https://doi.org/10.1037/a0037559

Perry, T. (2021). Cognitive science in the classroom: A review of evidence and implementation. Education Endowment Foundation (EEF).

Further Reading

Agarwal, P. K., & Bain, P. M. (2019). Powerful teaching: Unleash the science of learning. Jossey-Bass.

Bjork, E. L., & Bjork, R. A. (2011). Making things hard on yourself, but in a good way: Creating desirable difficulties to enhance learning. In M. A. Gernsbacher, R. W. Pew, L. M. Hough, & J. R. Pomerantz (Eds.), Psychology and the real world: Essays illustrating fundamental contributions to society (pp. 56–64). Worth Publishers.

Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., & Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychological Science in the Public Interest, 14(1), 4–58. https://doi.org/10.1177/1529100612453266

Roediger, H. L., & Butler, A. C. (2011). The critical role of retrieval practice in long-term retention. Trends in Cognitive Sciences, 15(1), 20–27. https://doi.org/10.1016/j.tics.2010.09.003

The engineering framework is pretty interesting for these findings. I suspect (similar to most doctors who were early adopters of electronic logging) that data input is a huge hurdle for most teachers if we’re going to track students’ retention regularly to make it effective. But I’ve not taught a full classroom, only a few students at once and usually only one.

It would be interesting to figure out how this could support teachers as they gain more experience to make a classroom more engaging. I’ve used Anki with my kids and it quickly loses its appeal and becomes overwhelming. But I also think that’s more than a UI problem, it’s likely a prioritization problem as well.

Do you know anyone trying to implement these ideas well, beyond a surface level “pop quiz” implementation?

Another useful post!

Just clarifying the intended metric. Does "success rate" mean:

- average percentage of students who can answer a question (or set of questions) correctly, or

- (average) percentage of questions answered correctly by a student (or class)

- something else